In this post, we will learn about basic Hyperparameter tuning using GridSearch. Since I want to focus solely on this concept I will skip other pre-processing and feature selection techniques which are also important steps in a Machine Learning pipeline.

import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.datasets import load_wine

data = load_wine()

wine_data = pd.DataFrame(data.data, columns=data.feature_names)

wine_target = pd.DataFrame(data.target, columns=['wine_class'])

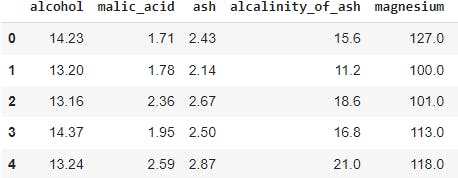

Here's a truncated glimpse of the dataframe

We will split the data into train and test sets so that we can do all of our EDA on the training set and keep the test set untouched.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(wine_data, wine_target, test_size=0.2, random_state=42)

Let's quickly generate summary statistics to get an overview of our data and find if there are any null values as well.

print(X_train.describe())

print(X_train.info())

alcohol malic_acid ... od280/od315_of_diluted_wines proline

count 142.000000 142.000000 ... 142.000000 142.000000

mean 12.979085 2.373521 ... 2.592817 734.894366

std 0.820116 1.143934 ... 0.722141 302.323595

min 11.030000 0.890000 ... 1.270000 278.000000

25% 12.332500 1.615000 ... 1.837500 502.500000

50% 13.010000 1.875000 ... 2.775000 660.000000

75% 13.677500 3.135000 ... 3.170000 932.750000

max 14.830000 5.800000 ... 4.000000 1547.000000

[8 rows x 13 columns]

<class 'pandas.core.frame.DataFrame'>

Int64Index: 142 entries, 158 to 102

Data columns (total 13 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 alcohol 142 non-null float64

1 malic_acid 142 non-null float64

2 ash 142 non-null float64

3 alcalinity_of_ash 142 non-null float64

4 magnesium 142 non-null float64

5 total_phenols 142 non-null float64

6 flavanoids 142 non-null float64

7 nonflavanoid_phenols 142 non-null float64

8 proanthocyanins 142 non-null float64

9 color_intensity 142 non-null float64

10 hue 142 non-null float64

11 od280/od315_of_diluted_wines 142 non-null float64

12 proline 142 non-null float64

It looks like we are good to go as there are no null values or incompatible data types

Hyperparameter Search

Hyperparameters are features that are intrinsic to the model. For instance, in the case of KNeighbors classifiers number of neighbors and in the case of Decision tree classifier, number of trees is the hyperparameter. These are things that we cannot learn from the data and are unique to the particular model that we would use. Hyperparameters are also found to affect model performance and the way to know what is the best hyperparameters requires experimentation. The process of finding the best value of hyperparameters is called tuning. GridSearch and RandomSearch are common techniques for hyperparameter tuning. For this article, we will look at a very basic type of GridSearch where will only tune 1 parameter the n_neighbbors.

from sklearn.neighbors import KNeighborsClassifier

from sklearn.metrics import accuracy_score

k_values = [1,2,3,4,5,6,7]

mse_values = []

for k in k_values:

knn = KNeighborsClassifier(n_neighbors=k, algorithm='brute')

knn.fit(X_train, y_train)

y_pred = knn.predict(X_test)

mse_values.append(accuracy_score(y_test,y_pred))

print(mse_values)

Output:

[0.7777777777777778, 0.7222222222222222, 0.8055555555555556, 0.75, 0.7222222222222222, 0.7222222222222222, 0.6944444444444444]

Looks like, k=3 performed best for this dataset and higher values than this decreased model accuracy, we can safely choose this. In practice, you will want to use more robust Hyperparameter tuning techniques such as GridSearch and RandomSearch where you can combine lots of different combinations of hyperparameters and evaluate model performance in one go, as we will see in the next post. Stay tuned friends.